As reported by Ars Technica: In the future, the US Air Force hopes to have armed drones flying in formation with human pilots, responding to their verbal and digital commands to fight the enemy and strike targets. That would require an artificial intelligence capable of interpreting commands and applying knowledge of combat tactics, something that is already being proven in a project funded by the Air Force Research Lab (AFRL).

ALPHA, an artificial intelligence trained by a retired Air Force expert in air combat, was originally developed as what amounts to the ultimate video game AI: an autonomous simulated enemy for use in training fighter pilots. The AI is so good that it has consistently beaten human pilots in simulated air combat, even when heavily handicapped by simulated physics. And now AFRL is investigating using ALPHA as the AI for Unmanned Combat Aerial Vehicles (UCAVs) in the physical world, potentially flying missions alongside human pilots.

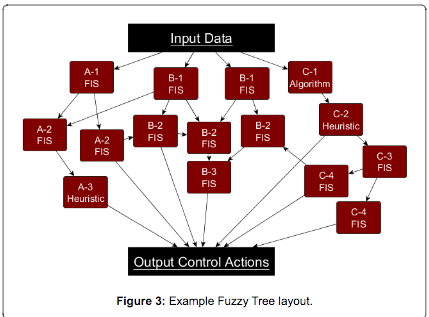

Described in a paper recently published in the Journal of Defense Management, ALPHA was created using a "genetic fuzzy tree" (GFT) system. There's a lot to unpack in that term, but in short, the methodology uses genetic algorithms (code intended to mimic evolution and natural selection) to train a collection of independent but interconnected "fuzzy inference systems" (FISs). Instead of training each bit of fuzzy logic independently for a given task, as is normally done in fuzzy systems, the genetic algorithm "is utilized to train each system in the Fuzzy Tree simultaneously," lead researcher Nick Ernest, CEO of Psibernetix Inc. (the company that developed ALPHA), and his co-authors wrote in the paper. "Each FIS has membership functions that classify the inputs and outputs into linguistic classifications, such as 'far away' and 'very threatening', as well as if-then rules for every combination of inputs, such as 'If missile launch computer confidence is moderate and mission kill shot accuracy is very high, fire missile'. By breaking up the problem into many sub-decisions, the solution space is significantly reduced."
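To make the quoted rule a little more concrete, here is a minimal Python sketch of a single fuzzy rule. It is not ALPHA's code: the membership-function shapes, the 0-to-1 input scales, the breakpoints, and every function name are assumptions chosen purely for illustration. The last helper counts rules under the paper's statement that each FIS holds a rule for every combination of its inputs.

```python
def triangular(x, left, peak, right):
    """Degree (0.0 to 1.0) to which x belongs to a triangular fuzzy set."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def ramp_up(x, start, full):
    """Degree of membership that rises from 0 at `start` to 1 at `full`."""
    if x <= start:
        return 0.0
    if x >= full:
        return 1.0
    return (x - start) / (full - start)


def fire_missile_strength(confidence, accuracy):
    """Firing strength of the rule:
    IF confidence is 'moderate' AND accuracy is 'very high' THEN fire missile.
    The breakpoints below are hypothetical, not taken from the paper.
    """
    moderate = triangular(confidence, 0.25, 0.5, 0.75)
    very_high = ramp_up(accuracy, 0.75, 1.0)
    # A common choice for fuzzy AND is the minimum of the two memberships.
    return min(moderate, very_high)


def rule_count(classes_per_input, num_inputs):
    """Rules needed when one FIS covers every combination of its inputs."""
    return classes_per_input ** num_inputs


if __name__ == "__main__":
    print(fire_missile_strength(0.5, 1.0))    # both conditions fully met
    print(fire_missile_strength(0.5, 0.875))  # accuracy only partly 'very high'
    print(rule_count(3, 6), 3 * rule_count(3, 2))
```

With these made-up numbers, one FIS covering six inputs with three classes each would need 729 rules, while three separate two-input FISs need 27 in total (ignoring whatever extra system combines their outputs). That is the kind of reduction the authors describe when they break the problem into sub-decisions.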

This, Ernest said, closely mirrors how humans make decisions on the fly. "Only considering the relevant variables for each sub-decision is key for us to complete complex tasks as humans," he said. "So, it makes sense to have the AI do the same thing."

The GFT approach and ALPHA were developed by Ernest during his doctoral research in aerospace engineering at the University of Cincinnati, over the course of a three-year fellowship funded by the Dayton Area Graduate Institute and the Air Force Research Lab. The tools for creating ALPHA incorporated input from retired Air Force Colonel Gene Lee, a former Air Force air combat instructor, as well as research and technology from AFRL, University of Cincinnati aerospace professor Kelly Cohen, and fellow doctoral student Tim Arnett. Before ALPHA earned its wings in simulated combat with humans in AFRL's Advanced Framework for Simulation, Integration, and Modeling (AFSIM) environment, the development team generated scores of random versions of ALPHA that were pitted against a version tuned with human input, running on a $500 desktop PC. The winning versions of the AI were then "bred" with each other, with the best-performing traits carried on to the next generation of ALPHA code. These were then let loose on each other to simulate natural selection. After successive generations of pitting AI versions against each other, only one remained: the alpha ALPHA, so to speak.
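The breed-the-winners loop described above follows the general pattern of a genetic algorithm, which can be sketched in a few lines of Python. This is a toy under loud assumptions, not the team's training code: each candidate is just a list of numbers, and `fitness` is a hypothetical stand-in that scores closeness to a hidden target, where the real project scored candidates by the outcome of simulated air combat. All names and parameters here are invented for the example.

```python
import random


def fitness(candidate, hidden_optimum):
    """Stand-in score (higher is better): negative squared distance to a target.
    In the real project, the score would come from simulated engagements."""
    return -sum((c - t) ** 2 for c, t in zip(candidate, hidden_optimum))


def breed(parent_a, parent_b, rng, mutation_scale=0.1):
    """Take each 'trait' from one parent at random, then mutate it slightly."""
    child = [rng.choice(pair) for pair in zip(parent_a, parent_b)]
    return [gene + rng.gauss(0.0, mutation_scale) for gene in child]


def evolve(hidden_optimum, pop_size=40, generations=30, seed=0):
    rng = random.Random(seed)  # seeded so runs are repeatable
    # Start from a population of random candidates.
    population = [
        [rng.uniform(-1.0, 1.0) for _ in hidden_optimum] for _ in range(pop_size)
    ]
    best_per_generation = []
    for _ in range(generations):
        ranked = sorted(
            population, key=lambda c: fitness(c, hidden_optimum), reverse=True
        )
        best_per_generation.append(fitness(ranked[0], hidden_optimum))
        # Keep the top quarter as winners and refill the rest with their children.
        winners = ranked[: pop_size // 4]
        children = [
            breed(rng.choice(winners), rng.choice(winners), rng)
            for _ in range(pop_size - len(winners))
        ]
        population = winners + children
    best = max(population, key=lambda c: fitness(c, hidden_optimum))
    return best, best_per_generation


if __name__ == "__main__":
    best, history = evolve([0.3, -0.7, 0.5])
    print(best)
    print(history[0], history[-1])
```

Because the winners are carried over unchanged into the next generation, the best score in this sketch can never get worse from one generation to the next. Whether the actual ALPHA training kept winners in this way is not stated in the article.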

In October, Lee took on ALPHA himself as the first human opponent. The former fighter combat instructor scored no kills against ALPHA's simulated aircraft—in fact, every simulated engagement ended in him being shot down. "I was surprised at how aware and reactive it was," Lee said. "It seemed to be aware of my intentions and reacting instantly to my changes in flight and my missile deployment. It knew how to defeat the shot I was taking. It moved instantly between defensive and offensive actions as needed." While most AIs he had encountered in simulations before could be "beat up on" by experienced pilots, he said, "until now, an AI opponent simply could not keep up with anything like the real pressure and pace of combat-like scenarios." After flying multi-hour simulated missions with ALPHA as the opponent, he said, "I go home feeling washed out. I'm tired, drained and mentally exhausted. This may be artificial intelligence, but it represents a real challenge."

It's not like ALPHA has been given any special advantages in these simulations. "The [simulated] aircraft have the exact same mechanical capabilities with respect to their mover models," Ernest told Ars in an e-mail. ALPHA won even when it was given an inferior aircraft to fly, one deliberately handicapped with lower speed, shorter missile range, and inferior sensors. "ALPHA has even occasionally been given a lesser G-Limit than the opponent," Ernest said, and it still won. ALPHA has also controlled multiple simulated aircraft at the same time in coordinated combat.

Going forward, AFRL and Psibernetix plan to continue training ALPHA against other pilots and to bring its simulated environment closer to the real world by making the simulation's aerodynamic and sensor models more realistic. "The goal is to continue developing ALPHA, to push and extend its capabilities," Ernest said. Eventually, AI like ALPHA could be trained to work in teams with human pilots and keep humans from making mistakes in combat. According to the researchers, ALPHA can act on sensor data to make or change decisions about combat for up to four aircraft in less than a millisecond, moving aircraft to evade missiles and fire weapons while a human pilot essentially manages the overall air battle at a higher level.
